Recently, we’ve been talking a lot about how Supportability Engineering has failed to deliver on its promises. Is the subject flawed, or are the techniques and capabilities required to deliver real support only just coming to light?

Our Chief Executive, Peter Stuttard, touched on how Integrated Logistics Support (ILS) and Logistics Support Analysis (LSA) are seen by many as effective ways of burning budgets, and not much else. This, of course, is not the fault of the subject itself, but of a lack of understanding and investment during the development stage, exacerbated by a continued lack of interest and investment once systems are put into operation.

Support is not “design once, run everywhere” – it must evolve and adapt to reflect new information and changing environments, operational requirements and threats.

So how do we evolve the support system, and how do we design support so that it can take account of these unknowns?

Modelling and analytics are key capabilities in the “Supportability” arsenal, allowing us to anticipate future problems and to prepare for them proactively.

What is Modelling?

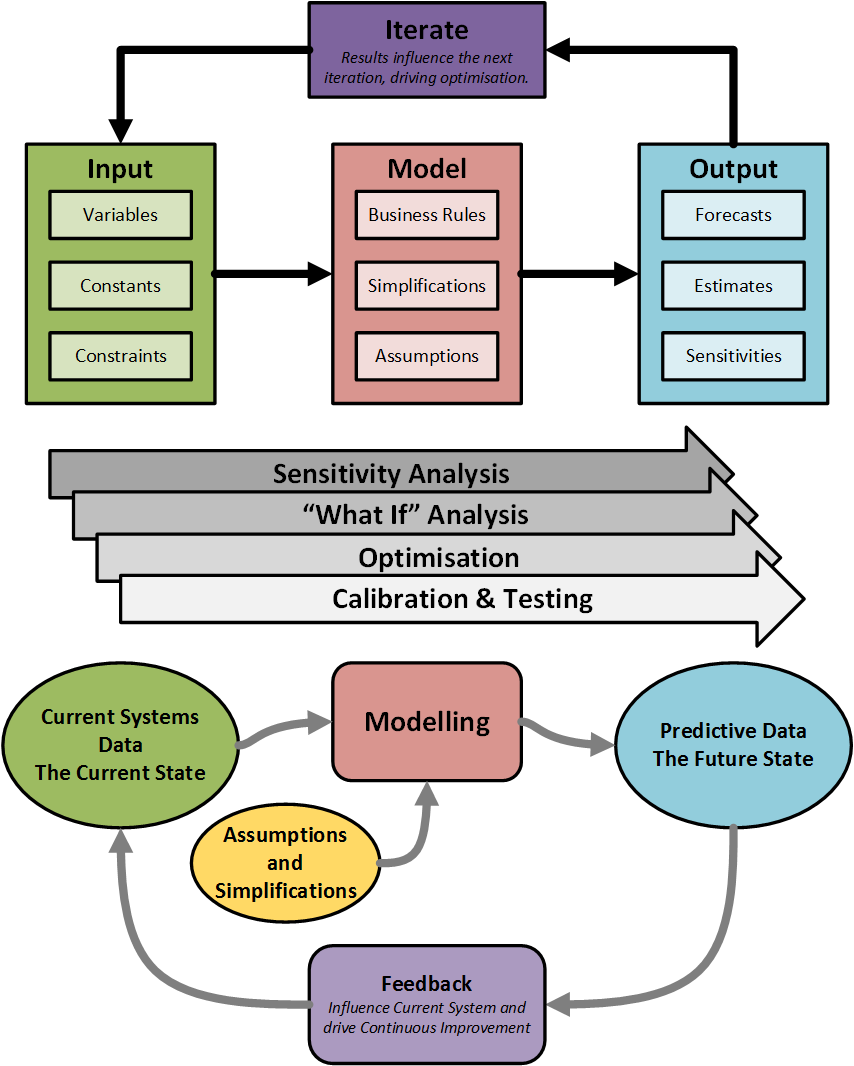

A model is simply a representation of a system: usually software that performs calculations and outputs data predicting how that system may behave in a particular set of circumstances. Think of a model as a glorified sandpit in which we can examine alternative scenarios and ask “what if…?”
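To make this concrete, here is a minimal sketch of a very small model. It assumes the common simplified expression for operational availability, Ao = MTBF / (MTBF + MTTR + MLDT), in which the downtime per failure is repair time plus logistics delay. The `SupportScenario` and `operational_availability` names and all the figures are hypothetical, chosen only for illustration; a real support model would also account for preventive maintenance, administrative delays and many more interactions.

```python
from dataclasses import dataclass, replace


@dataclass(frozen=True)
class SupportScenario:
    """Hypothetical inputs for a simplified availability model (all times in hours)."""
    mtbf: float  # mean time between failures (assumed uptime between failures)
    mttr: float  # mean time to repair once spares and people are on hand
    mldt: float  # mean logistics delay time (waiting for spares, transport, etc.)


def operational_availability(s: SupportScenario) -> float:
    """Fraction of time available: uptime / (uptime + downtime per failure)."""
    downtime_per_failure = s.mttr + s.mldt
    return s.mtbf / (s.mtbf + downtime_per_failure)


baseline = SupportScenario(mtbf=100.0, mttr=4.0, mldt=16.0)
# "What if" the logistics delay triples, e.g. when operating far from base?
remote = replace(baseline, mldt=48.0)

for name, scenario in [("baseline", baseline), ("remote", remote)]:
    print(f"{name}: Ao = {operational_availability(scenario):.3f}")
```

Each “what if” is just a changed input: `dataclasses.replace` copies the baseline with one field altered, so alternative scenarios can be compared side by side without touching the model itself.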

What is Analytics?

In the simplest of terms, analytics is just “using models”. We gather data (either real or hypothetical), plug it into our model, and use the results to inform our thinking. This last part is the key (and often forgotten) aspect of analytics: analytics is about using information (both data and models) to inform and influence decision-making.

So, what does this mean for Supportability Engineering?

Consider helicopter platforms operated by the Navy. Such platforms often carry out multiple roles (freight, troop movement, surveillance, search and rescue…) and can remain in service for upwards of 30 years. They operate from a variety of locations: sometimes from bases within the UK, at other times from the back of a ship for months at a time. Indeed, over 30 or more years of service, the roles a platform carries out, and the environments it operates in, can change many times. How can we possibly determine an optimal system to support such a platform when the goalposts keep moving? The answer, of course, lies in the effective use of complex system models.

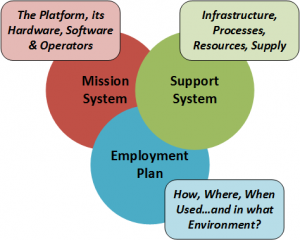

We cannot provide effective and agile support without first having effective and agile models. By modelling the whole system (the Mission System, Support System and Employment Plan), we can analyse the complex interactions between its component parts.

Neglecting these interactions is why support so often fails. If we do not understand that the way we use the platform (the Employment Plan) influences the effectiveness and efficiency of support, we end up with a platform that can’t operate as it should. There is no “one size fits all” for a complex system.

By modelling at this level we can “plug and play” a wide range of alternative solutions and operational scenarios, and see how they interact. More importantly, this can be done affordably, quickly and in a safe environment. Rather than wait for events to happen in real life (too late), or conduct exercises to emulate the possibilities (too expensive), we use models as a playground to ask all of these “what-ifs”.

The Mission System – What if we change the design? What are the effects of aging? What if we make modifications, or change the role?

The Employment Plan – What if we have to use it in a new environment? What if there is a surge in demand requiring operation for longer and with higher intensity, or if we suddenly have to operate from the other side of the world?

The Support System – What happens if we buy more spares? What if there is a shortage of suitably qualified maintenance personnel? What would be the impact of investing in smart new diagnostic equipment? What if our supply chain is cut off?

What if there is a combination of two or more of these changes?
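Even a very simple model can explore these questions and their combinations. The sketch below is a single-item Poisson spares-sufficiency calculation. It assumes one hypothetical repairable component whose failures occur at a constant rate per flying hour, a fixed resupply time, and a target of 95% probability that the spares held cover all failures during resupply. All function names, scenarios and figures are illustrative assumptions, not data from a real platform.

```python
import math


def poisson_cdf(k: int, mean: float) -> float:
    """P(X <= k) for X ~ Poisson(mean), summed term by term."""
    term = math.exp(-mean)  # P(X = 0)
    total = term
    for i in range(1, k + 1):
        term *= mean / i  # P(X = i) derived from P(X = i - 1)
        total += term
    return total


def pipeline_demand(flying_hours_per_day: float, resupply_days: float,
                    mtbf_hours: float) -> float:
    """Expected failures of the component while replacements are in resupply."""
    return flying_hours_per_day * resupply_days / mtbf_hours


def stock_sufficiency(spares: int, **usage) -> float:
    """Probability that `spares` units cover all failures during one resupply period."""
    return poisson_cdf(spares, pipeline_demand(**usage))


def min_spares(target: float, **usage) -> int:
    """Smallest spares holding whose sufficiency meets the target probability."""
    assert 0.0 < target < 1.0, "target must be a probability below 1"
    spares = 0
    while stock_sufficiency(spares, **usage) < target:
        spares += 1
    return spares


# Hypothetical scenarios: usage (Employment Plan) and resupply time (Support System).
scenarios = {
    "baseline": dict(flying_hours_per_day=4, resupply_days=30, mtbf_hours=500),
    "surge": dict(flying_hours_per_day=8, resupply_days=30, mtbf_hours=500),
    "supply chain disrupted": dict(flying_hours_per_day=4, resupply_days=90, mtbf_hours=500),
    "surge + disrupted": dict(flying_hours_per_day=8, resupply_days=90, mtbf_hours=500),
}

for name, usage in scenarios.items():
    print(f"{name}: {min_spares(0.95, **usage)} spare(s) for 95% sufficiency")
```

With these assumed figures, the baseline needs 1 spare, and a usage surge alone or a disrupted supply chain alone each needs 2. The two changes together need 4, more than adding the two individual increases would suggest. This is exactly the kind of interaction that a what-if model is meant to expose before it happens in real life.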

This all sounds obvious: modelling and analytics are relatively quick and cheap ways to deliver effective systems. So what is holding us back from doing more of it? While we all like to think of ourselves as logical and rational thinkers, a great deal of decision-making is still based on instinct and “gut feel” rather than on the available evidence.

These behaviours are exacerbated by a tendency among those of us on the analytical side to present “black-box” solutions: models that may provide accurate results, delivered without the effort needed to convey how or why they work. One of our previous articles stated that “ILS needs better PR”, but so too does analytics. How can we expect people to believe our predictions and forecasts if we do not explain how we arrived at them?

Analytics professionals – we must aim not only to provide models and answers, but also to ensure that stakeholders understand and believe in those answers.

Those of us in the wider supportability engineering domain, managers and stakeholders – we must strive to ensure that our decisions (and those of our organisation) are based on evidence, not instinct.

If you would like to understand more about modelling, analytics and decision support, along with their place within supportability engineering, then check out our recent webinar.

“Decision Theory for Engineering Support – where should we invest: spares, repair or logistics?”